Automate SEO with the SERP API! Learn how to collect and analyze Google search data using the DataForSEO API and BigQuery. Boost rankings, track competitors, and make data-driven decisions effortlessly.

In today’s data-driven world, understanding your website’s performance in search engine results pages (SERPs) is crucial. This blog post walks through an approach that uses the DataForSEO SERP API to automate the collection of Google search results and BigQuery to store and analyze them. We’ll explore how the SERP API works, unpack the code’s functionality with code snippets, and discuss potential use cases for businesses seeking insights from their search rankings. Whether you’re tracking keyword rankings, analyzing competitors, or optimizing content, applying data science principles to SEO gives you a scalable, repeatable way to measure and improve search performance.

Table of Contents

- Understanding DataForSEO for Google SERP API

- Code Functionality – A Deep Dive into SERP API Automation

- Error Handling and Rate Limiting in SERP API Workflows

- Deploying SERP API Automation on Google Cloud Run

- Scheduling SERP API Tasks with Google Cloud Scheduler

- Potential Business Use Cases for SERP API Data

- Conclusion

- Complete Code

Understanding DataForSEO for Google SERP API

DataForSEO is a comprehensive SEO toolkit that offers various features, including a robust Google SERP API. This API lets you programmatically retrieve organic search results for specific keywords, locations, and devices.

Here are some key functionalities of the SERP API used in the code:

- Task Posting (taskPostUrl): This endpoint lets you submit tasks to retrieve SERP data for your desired keywords, locations (coordinates), and devices.

- Task Getting (taskGetUrl): Once a task is submitted, you can use this endpoint to check its status and retrieve the results when it’s complete.

- Ready Tasks (taskReadyUrl): This endpoint helps you identify tasks that have finished processing and have results ready for download.
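The three endpoints above can be wrapped in a small client. The sketch below is not the repository’s code; it assumes the DataForSEO v3 Google Organic paths (task_post, tasks_ready, task_get/regular/{id}) and a response shape of tasks[].result[], both of which you should verify against the DataForSEO documentation. DataForSEO authenticates with HTTP Basic auth using your API login and password. The makeClient helper is hypothetical, and fetchImpl is injected so the helpers can run without network access (in Node 18+ you can pass the global fetch).

```javascript
// ASSUMPTION: v3 Google Organic endpoint paths; verify against the DataForSEO docs.
const BASE = "https://api.dataforseo.com/v3/serp/google/organic";
const taskPostUrl = `${BASE}/task_post`;
const taskReadyUrl = `${BASE}/tasks_ready`;
const taskGetUrl = (id) => `${BASE}/task_get/regular/${id}`;

// HTTP Basic authentication header built from the API login and password.
function authHeader(login, password) {
  return "Basic " + Buffer.from(`${login}:${password}`).toString("base64");
}

// Hypothetical helper: returns functions for the three calls described above.
function makeClient(login, password, fetchImpl) {
  const headers = {
    Authorization: authHeader(login, password),
    "Content-Type": "application/json",
  };

  async function call(url, options = {}) {
    const res = await fetchImpl(url, { headers, ...options });
    if (!res.ok) throw new Error(`HTTP ${res.status} for ${url}`);
    return res.json();
  }

  return {
    // Submit an array of task objects in one POST request.
    postTasks: (tasks) =>
      call(taskPostUrl, { method: "POST", body: JSON.stringify(tasks) }),
    // Collect the IDs of tasks whose results are ready (assumed shape: tasks[].result[].id).
    readyTaskIds: async () => {
      const data = await call(taskReadyUrl);
      return (data.tasks || []).flatMap((t) => (t.result || []).map((r) => r.id));
    },
    // Download the SERP result for one finished task.
    getTask: (id) => call(taskGetUrl(id)),
  };
}
```

A typical loop posts tasks, polls readyTaskIds, and calls getTask for each returned ID.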

The provided code offers an automated workflow for fetching Google search results using the SERP API and storing them in BigQuery, Google’s cloud data warehouse. Let’s break down the code’s key functionalities with snippets.

Code Functionality – A Deep Dive into SERP API Automation

- Configuration (Initial Lines):

This section defines essential variables, such as your BigQuery project ID, dataset ID, and the path to your Google Cloud service account key file. You can also keep your API credentials in a .env file instead of hard-coding them.
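A minimal sketch of this configuration step, assuming the values are exposed as environment variables (for example, loaded from the .env file by a package such as dotenv). The variable names other than GOOGLE_APPLICATION_CREDENTIALS, which Google client libraries read to locate a service account key, are hypothetical; match them to your own setup.

```javascript
// Hypothetical variable names; adjust to match your .env file.
const REQUIRED = [
  "BIGQUERY_PROJECT_ID",
  "BIGQUERY_DATASET_ID",
  "DATAFORSEO_LOGIN",
  "DATAFORSEO_PASSWORD",
];

function loadConfig(env = process.env) {
  const missing = REQUIRED.filter((name) => !env[name]);
  if (missing.length > 0) {
    // Fail fast at startup instead of partway through a long run.
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return {
    projectId: env.BIGQUERY_PROJECT_ID,
    datasetId: env.BIGQUERY_DATASET_ID,
    // Optional: on Cloud Run, client libraries can use the service's own identity.
    keyFilename: env.GOOGLE_APPLICATION_CREDENTIALS,
    login: env.DATAFORSEO_LOGIN,
    password: env.DATAFORSEO_PASSWORD,
  };
}
```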

- Data Retrieval and Processing (Functions):

- processAPIDatatoBigQuery: This primary function orchestrates the entire process. It reads keywords, locations, and devices from a Google Sheet, generates tasks for the SERP API, retrieves results, and stores them in BigQuery.

- fetchandProcessTaskResult: This function retrieves search results for a specific task ID, processes the data, and stores it in BigQuery tables. Optionally, it uses a coordinateToCityState map to enrich the data with city and state information.

- generateTasks: This function takes location coordinates, keywords, and devices as input and generates a list of tasks for the SERP API. Each task specifies a keyword, location, and device combination for which you want to retrieve search results.
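The task-generation step amounts to a nested loop over every coordinate, keyword, and device combination. This is a sketch rather than the repository’s implementation: the payload field names (keyword, location_coordinate, language_code, device, tag) are assumptions about the DataForSEO task_post format, and chunk is a hypothetical helper for splitting tasks into batches, since the API documents a maximum number of tasks per POST request (check the current value in its docs).

```javascript
// One task per (coordinate, keyword, device) combination.
// ASSUMPTION: field names follow the DataForSEO task_post format; verify before use.
function generateTasks(coordinates, keywords, devices, languageCode = "en") {
  const tasks = [];
  for (const coordinate of coordinates) {
    for (const keyword of keywords) {
      for (const device of devices) {
        tasks.push({
          keyword,
          location_coordinate: coordinate, // e.g. "latitude,longitude,radius"
          language_code: languageCode,
          device, // "desktop" or "mobile"
          // Short identifier for matching results back to their inputs;
          // keep it compact, since the API caps the tag length.
          tag: JSON.stringify({ coordinate, keyword, device }),
        });
      }
    }
  }
  return tasks;
}

// Hypothetical helper: split tasks into batches of at most `size` per request.
function chunk(items, size) {
  const batches = [];
  for (let i = 0; i < items.length; i += size) {
    batches.push(items.slice(i, i + size));
  }
  return batches;
}
```

Two coordinates, two keywords, and two devices yield 2 × 2 × 2 = 8 tasks, so the task count (and API cost) grows multiplicatively with each input list.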

- BigQuery Interactions (Functions):

- createTablesIfNotExist: This function checks if the required tables exist in your BigQuery dataset (search_result, items, links, item_items) and creates them if necessary, along with their respective schemas.

- insertData: This function takes retrieved search data, extracts relevant information (keywords, rankings, URLs, etc.), and inserts it into the corresponding BigQuery tables.
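The transformation behind fetchandProcessTaskResult and insertData can be sketched as flattening one task_get response into rows for the search_result and items tables. This assumes the response carries the posted parameters in tasks[].data and the organic results in tasks[].result[].items with fields such as type, rank_group, rank_absolute, domain, title, and url; toRows and the row column names are hypothetical and must match your BigQuery schemas.

```javascript
// Hypothetical helper: flatten a task_get response into table rows.
function toRows(response, coordinateToCityState = {}) {
  const searchRows = [];
  const itemRows = [];
  for (const task of response.tasks || []) {
    const params = task.data || {}; // parameters the task was posted with (assumed)
    // Optional enrichment: map the posted coordinate to a city/state label.
    const place = coordinateToCityState[params.location_coordinate] || {};
    for (const result of task.result || []) {
      searchRows.push({
        task_id: task.id,
        keyword: result.keyword,
        device: params.device,
        location_coordinate: params.location_coordinate,
        city: place.city || null,
        state: place.state || null,
        fetched_at: result.datetime,
      });
      for (const item of result.items || []) {
        itemRows.push({
          task_id: task.id,
          type: item.type,
          rank_group: item.rank_group,
          rank_absolute: item.rank_absolute,
          domain: item.domain,
          title: item.title,
          url: item.url,
        });
      }
    }
  }
  return { searchRows, itemRows };
}
```

With the @google-cloud/bigquery client, each array could then be streamed with bigquery.dataset(datasetId).table(tableName).insert(rows).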

Error Handling and Rate Limiting in SERP API Workflows

A robust system should anticipate potential errors and handle them gracefully. The provided code incorporates error handling and rate limiting so the script stays reliable and within the API’s usage limits: an account can make up to 2,000 API requests (POST or GET) per minute on a single route.

1. Error Handling

The code uses try-catch blocks around potential failure points. For instance, when posting or fetching tasks, the code catches exceptions and logs the errors for later analysis.

Additionally, the fetchWithRetry function is employed to handle transient network errors or SERP API rate limiting issues by retrying failed requests a specified number of times with increasing delays.
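A retry helper of this kind can be sketched with exponential backoff. This illustrates the pattern rather than reproducing the repository’s fetchWithRetry: the retry count, base delay, and the choice to retry on HTTP 429 and 5xx responses are assumptions, and fetchImpl defaults to the global fetch available in Node 18+.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Retries network errors, HTTP 429, and 5xx responses with exponential backoff.
async function fetchWithRetry(
  url,
  options = {},
  { retries = 3, baseDelayMs = 500, fetchImpl = globalThis.fetch } = {}
) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      const res = await fetchImpl(url, options);
      // Rate limiting (429) and server errors are treated as retryable.
      if (res.status === 429 || res.status >= 500) {
        throw new Error(`Retryable HTTP ${res.status}`);
      }
      return res; // Any other status is returned to the caller as-is.
    } catch (err) {
      lastError = err;
      if (attempt < retries) {
        // Waits baseDelayMs, then 2x, 4x, ... between attempts.
        await sleep(baseDelayMs * 2 ** attempt);
      }
    }
  }
  throw lastError;
}
```

Doubling the delay gives a rate-limited API progressively more time to recover, while the retry cap keeps a persistent failure from stalling the whole run.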

2. Rate Limiting

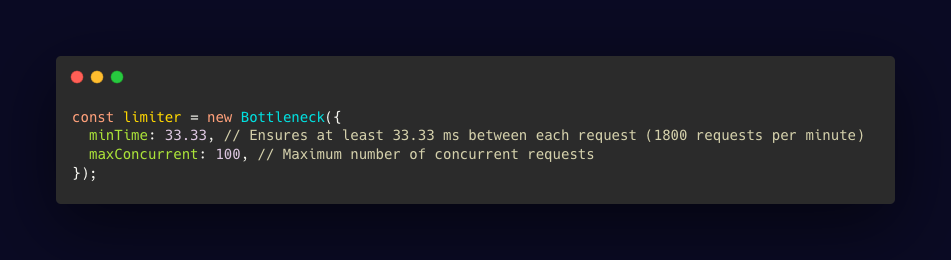

To avoid overwhelming the SERP API, the code uses the Bottleneck library for rate limiting. The limiter object enforces a minimum of 33.33 milliseconds between requests, which caps throughput at about 30 requests per second (roughly 1,800 per minute), safely below the API’s limit of 2,000 requests per minute per account.
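With Bottleneck installed, this spacing comes from its minTime option, as in new Bottleneck({ minTime: 33.33 }), with each request wrapped in limiter.schedule(() => ...). The dependency-free sketch below is a simplified stand-in for that single behavior, not Bottleneck itself (which also handles concurrency, priorities, and more); createMinTimeLimiter and maxPerMinute are hypothetical names.

```javascript
// Start each scheduled job no sooner than minTimeMs after the previous one started.
function createMinTimeLimiter(minTimeMs) {
  let nextStart = 0; // earliest timestamp at which the next job may start
  return function schedule(job) {
    const now = Date.now();
    const startAt = Math.max(now, nextStart);
    nextStart = startAt + minTimeMs;
    // Round the wait up so timer truncation never shortens the spacing.
    return new Promise((resolve) => setTimeout(resolve, Math.ceil(startAt - now))).then(job);
  };
}

// Ceiling on requests per minute for a given spacing: 60000 / 33.33 ≈ 1800.
const maxPerMinute = (minTimeMs) => Math.floor(60000 / minTimeMs);
```

A spacing of exactly 30 ms would hit the 2,000-per-minute limit with no margin, which is why a slightly larger interval is the safer choice.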

By incorporating error handling and rate limiting, the code enhances its resilience and reliability in handling API responses and potential issues.

Understanding the Code’s Core Functionalities

Before diving into deployment and scheduling, let’s recap the core functionalities of the code:

- Data Retrieval: Fetches search results from DataForSEO API based on specified keywords, locations, and devices.

- Data Transformation: Processes the raw data into a structured format suitable for BigQuery.

- Data Storage: Inserts processed data into BigQuery tables for analysis and reporting.

- Error Handling: Implements error-handling mechanisms to ensure robustness and reliability.

- Rate Limiting: Controls the frequency of API requests to comply with DataForSEO’s usage limits.

Deploying SERP API Automation on Google Cloud Run

Google Cloud Run is an excellent choice for deploying this application due to its serverless nature, scalability, and ease of management. Here’s a basic outline of the deployment steps:

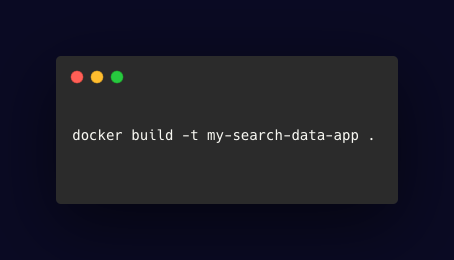

- Containerization: Package the application code and its dependencies into a Docker container image. This ensures consistency across environments; supply environment variables at deploy time rather than baking credentials into the image.

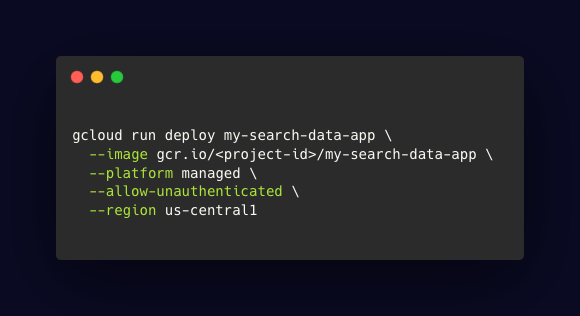

- Create a Cloud Run Service: Deploy the Docker image to Cloud Run, specifying the required resources, environment variables, and other configuration settings.

- Replace <project-id> with your actual project ID.

- The --allow-unauthenticated flag is for demonstration purposes. In production, you should implement appropriate authentication and authorization.

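- Environment Variables: Set the SERP API credentials, project IDs, and other configuration as environment variables on the Cloud Run service. For sensitive values, Cloud Run can also expose secrets stored in Secret Manager.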
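Cloud Run routes requests to the port named in the PORT environment variable (8080 by default), so the container needs a small HTTP entry point that triggers the job. The sketch below uses only Node’s built-in http module; runJob stands in for processAPIDatatoBigQuery, and createHandler, startServer, and the JSON responses are hypothetical choices rather than the repository’s code.

```javascript
// Request handler that runs the job on POST and reports the outcome.
function createHandler(runJob) {
  return async function handler(req, res) {
    if (req.method !== "POST") {
      res.writeHead(405, { "Content-Type": "application/json" });
      res.end(JSON.stringify({ error: "Use POST" }));
      return;
    }
    try {
      const summary = await runJob();
      res.writeHead(200, { "Content-Type": "application/json" });
      res.end(JSON.stringify({ status: "ok", summary }));
    } catch (err) {
      console.error(err); // Cloud Run sends stderr to Cloud Logging
      // A 5xx status tells callers such as Cloud Scheduler that the run failed.
      res.writeHead(500, { "Content-Type": "application/json" });
      res.end(JSON.stringify({ status: "error", message: err.message }));
    }
  };
}

// Call from your entry file, e.g. startServer(processAPIDatatoBigQuery).
function startServer(runJob, port = Number(process.env.PORT) || 8080) {
  const http = require("http");
  const server = http.createServer(createHandler(runJob));
  server.listen(port, () => console.log(`Listening on port ${port}`));
  return server;
}
```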

Scheduling SERP API Tasks with Google Cloud Scheduler

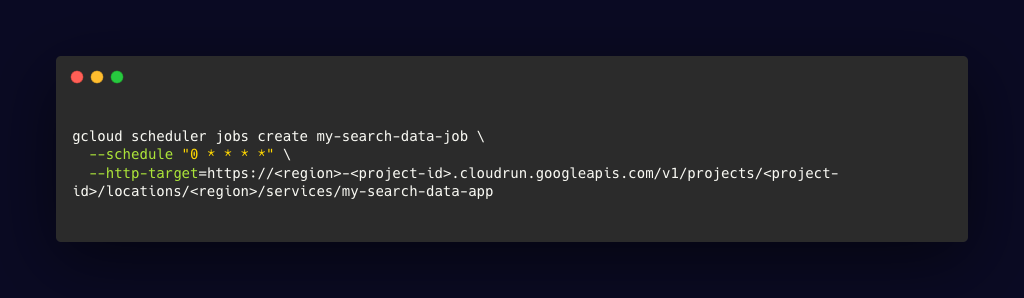

Google Cloud Scheduler allows you to automate the execution of tasks on a recurring schedule. To schedule the search data collection and ingestion process:

- Create a Cloud Scheduler Job: Define a job with the desired schedule (e.g., daily, hourly).

- Set the Target: Configure the job to invoke the Cloud Run service you deployed.

- Error Handling: Configure the job’s retry settings (such as retry count and backoff) so that failed runs are retried automatically.

Replace placeholders with your project ID and region.
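The job settings can also be expressed as data. This sketch builds a plain object following the fields of the Cloud Scheduler v1 Job resource (schedule, timeZone, httpTarget with an oidcToken, retryConfig, attemptDeadline). buildSchedulerJob is a hypothetical helper, and the schedule, retry values, and IDs are placeholders. An object of this shape could be passed as the job in a createJob call of the @google-cloud/scheduler client, or mirrored in gcloud flags.

```javascript
// Hypothetical helper; all IDs, URLs, and timing values are placeholders.
function buildSchedulerJob({ projectId, region, jobId, serviceUrl, serviceAccountEmail }) {
  return {
    name: `projects/${projectId}/locations/${region}/jobs/${jobId}`,
    schedule: "0 6 * * *", // unix-cron format: every day at 06:00
    timeZone: "Etc/UTC",
    httpTarget: {
      uri: serviceUrl,
      httpMethod: "POST",
      // OIDC token lets the job call a Cloud Run service that requires authentication.
      oidcToken: { serviceAccountEmail, audience: serviceUrl },
    },
    retryConfig: {
      retryCount: 3,
      minBackoffDuration: "60s",
      maxBackoffDuration: "600s",
      maxDoublings: 3,
    },
    attemptDeadline: "1800s", // how long Scheduler waits for the service to respond
  };
}
```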

Additional Considerations

- Security: Implement robust security measures, including authentication, authorization, and data encryption.

- Scalability: Consider using Cloud Run’s autoscaling features to handle varying workloads.

- Monitoring: Set up monitoring and logging to track the application’s performance and identify potential issues.

- Cost Optimization: Optimize resource utilization and leverage Cloud Run’s pricing models to control costs.

- Data Retention: Define a data retention policy for BigQuery to manage storage costs effectively.
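One way to express a retention policy is through BigQuery table metadata: partition each table by day on a timestamp column and let partitions expire after a set number of days. The sketch below is an assumption-laden illustration; retentionOptions is a hypothetical helper, and the column name and 90-day window are placeholders. Options of this shape could be supplied when a table is created, for example inside createTablesIfNotExist.

```javascript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Hypothetical helper: table options with daily partitions that expire.
function retentionOptions(schemaFields, partitionField, retentionDays) {
  if (!Number.isInteger(retentionDays) || retentionDays <= 0) {
    throw new Error("retentionDays must be a positive integer");
  }
  return {
    schema: { fields: schemaFields },
    timePartitioning: {
      type: "DAY",
      field: partitionField, // must be a DATE, TIMESTAMP, or DATETIME column
      // The REST API represents int64 values as strings.
      expirationMs: String(retentionDays * MS_PER_DAY),
    },
  };
}
```

For a 90-day window, expirationMs is 90 × 86,400,000 = 7,776,000,000.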

By following these steps and incorporating best practices, you can create a reliable and scalable solution for collecting and analyzing Google search data.

Potential Business Use Cases for SERP API Data

1. Rank Tracking and Competitor Analysis

- Monitor Keyword Rankings: Track the positions of your target keywords over time to measure search engine performance.

- Identify Competitors: Analyze search results to discover your top competitors and their strategies.

- Track SERP Features: Monitor the appearance of rich snippets, featured snippets, and other SERP features to optimize your content.
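Once results accumulate in BigQuery, rank tracking reduces to comparing snapshots. This sketch assumes each row has keyword, domain, and rank_absolute fields (hypothetical column names; in practice the rows would come from a query), takes a domain’s best position per keyword in each snapshot, and reports the movement.

```javascript
// Best (lowest) position per keyword for one domain.
function bestRanks(rows, domain) {
  const best = new Map();
  for (const r of rows) {
    if (r.domain !== domain) continue;
    const prev = best.get(r.keyword);
    if (prev === undefined || r.rank_absolute < prev) best.set(r.keyword, r.rank_absolute);
  }
  return best;
}

// Compare two snapshots; positive change means the domain moved up.
function rankChanges(previousRows, currentRows, domain) {
  const before = bestRanks(previousRows, domain);
  const after = bestRanks(currentRows, domain);
  const keywords = new Set([...before.keys(), ...after.keys()]);
  return [...keywords].map((keyword) => {
    const from = before.has(keyword) ? before.get(keyword) : null;
    const to = after.has(keyword) ? after.get(keyword) : null;
    // null marks a keyword that entered or dropped out of the results.
    const change = from !== null && to !== null ? from - to : null;
    return { keyword, from, to, change };
  });
}
```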

2. Content Optimization

- Identify Search Intent: Understand the intent behind search queries to create highly relevant content.

- Optimize Content Structure: Analyze search results to identify common elements like headings, meta descriptions, and content length for effective optimization.

- A/B Testing: Test different content variations to determine which performs best in search results.

3. Local SEO

- Track Local Rankings: Monitor your local search rankings to measure the impact of local SEO efforts.

- Identify Local Competitors: Analyze search results to identify local competitors and their online presence.

- Optimize Google Business Profile: Use search result data to improve your Google Business Profile (formerly Google My Business) listing.

4. Market Research

- Identify Market Trends: Analyze search volume and keyword trends to identify emerging opportunities.

- Understand Customer Behavior: Analyze search queries to gain insights into customer needs and preferences.

- Identify Target Audience: Determine the demographics and interests of your target audience based on search data.

5. Ad Campaign Optimization

- Inform Keyword Bidding: Use search result data to optimize keyword bidding strategies for paid search campaigns.

- Identify Ad Opportunities: Find opportunities to create ad extensions or ad copy based on competitor analysis.

- Measure Ad Performance: Track the performance of your ads in relation to organic search results.

Conclusion

In today’s competitive digital landscape, leveraging the power of the SERP API is essential for businesses aiming to optimize their search engine performance. By automating Google search data collection and analysis with tools like DataForSEO API and BigQuery, you can unlock valuable insights into keyword rankings, competitor strategies, and user search behavior.

This post has walked you through setting up an automated workflow, from fetching SERP data and processing it to storing it in BigQuery for advanced analysis. With error handling, rate limiting, and integration with Google Cloud services, this approach is built to be scalable, reliable, and efficient.

Whether you’re tracking rankings, optimizing content, or conducting market research, the SERP API empowers you to make data-driven decisions that drive growth and improve online visibility. Start automating your SEO efforts today and stay ahead in the ever-evolving world of search engine optimization.

Complete Code

You can get the complete code in this GitHub repository: